Background

Tintin — © Hergé / Moulinsart 2017

As described in the About section, Vincent grew up reading and admiring the 'ligne claire' of Hergé and other European graphic novel masters, as well as European and Japanese art. He later learned that some of their work was inspired by Japanese woodblock prints; Hergé, in particular, was influenced by Hiroshige.

Although Vincent came to graphics from the engineering side, starting with 2D computer graphics, he has been drawn to its creative side. He has learned visual arts and design, starting with 2D, then focusing on 3D art, and he is now exploring how to use generative Artificial Intelligence (AI) features purposefully.

Today, Vincent mixes coding, 2D and 3D tools depending on the project. He increasingly focuses on animated and interactive experiences, which makes coding more central and his interest in web technologies more important.

Coding

Vincent has been coding since he studied computer science and learned object-oriented programming. Starting with C, he went on to learn C++, Java and Python, and later focused on web technologies, in particular JavaScript, SVG, CSS and HTML.

Today, Vincent mostly uses the following languages:

- JavaScript, SVG, CSS and HTML for interactive, graphical web experiences. He plans to use WebGL, and hopefully WebGPU, more and more in the future.

- Python for scripting in Cinema 4D, allowing him to create geometry and animation from code and data.
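To illustrate what "creating geometry and animation from data" can mean, here is a minimal, hypothetical sketch in plain Python. It maps a data series to bar positions, heights and staggered keyframe timings; the names (`BarSpec`, `bars_from_data`, `height_at`) and the default spacing and timing values are assumptions. In an actual Cinema 4D script, each spec would then be turned into an object and keyframes through the `c4d` module; those calls are deliberately left out so the logic runs anywhere.

```python
from dataclasses import dataclass


@dataclass
class BarSpec:
    """Hypothetical description of one bar: where it sits and how it animates."""
    x: float          # horizontal position in scene units
    height: float     # final height in scene units
    start_frame: int  # frame at which the bar starts growing
    end_frame: int    # frame at which it reaches full height


def bars_from_data(values, spacing=120.0, max_height=400.0,
                   frames_per_bar=10, grow_frames=30):
    """Map a data series to bar positions, heights and staggered animation timings."""
    peak = max(values)
    specs = []
    for i, v in enumerate(values):
        start = i * frames_per_bar  # each bar starts a little after the previous one
        specs.append(BarSpec(
            x=i * spacing,
            height=max_height * v / peak,  # normalise so the largest value gets max_height
            start_frame=start,
            end_frame=start + grow_frames,
        ))
    return specs


def height_at(spec, frame):
    """Linearly interpolate a bar's height between its two keyframes."""
    if frame <= spec.start_frame:
        return 0.0
    if frame >= spec.end_frame:
        return spec.height
    t = (frame - spec.start_frame) / (spec.end_frame - spec.start_frame)
    return spec.height * t
```

For example, `bars_from_data([3, 6, 12])` yields bars 100, 200 and 400 units tall, each starting to grow 10 frames after the previous one. Keeping the data-to-scene mapping separate from the Cinema 4D calls makes it easy to re-run the same animation with new data.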

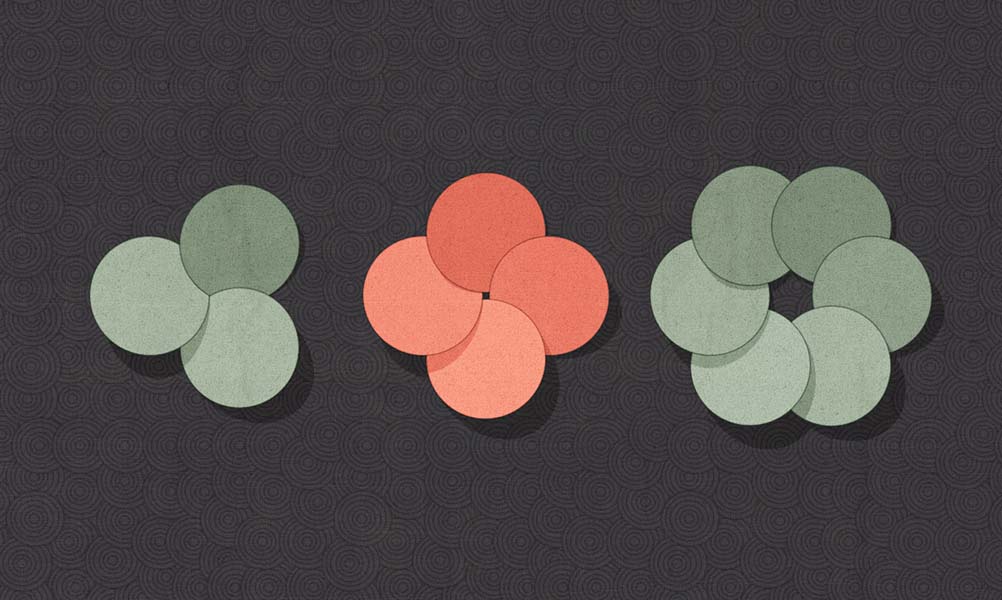

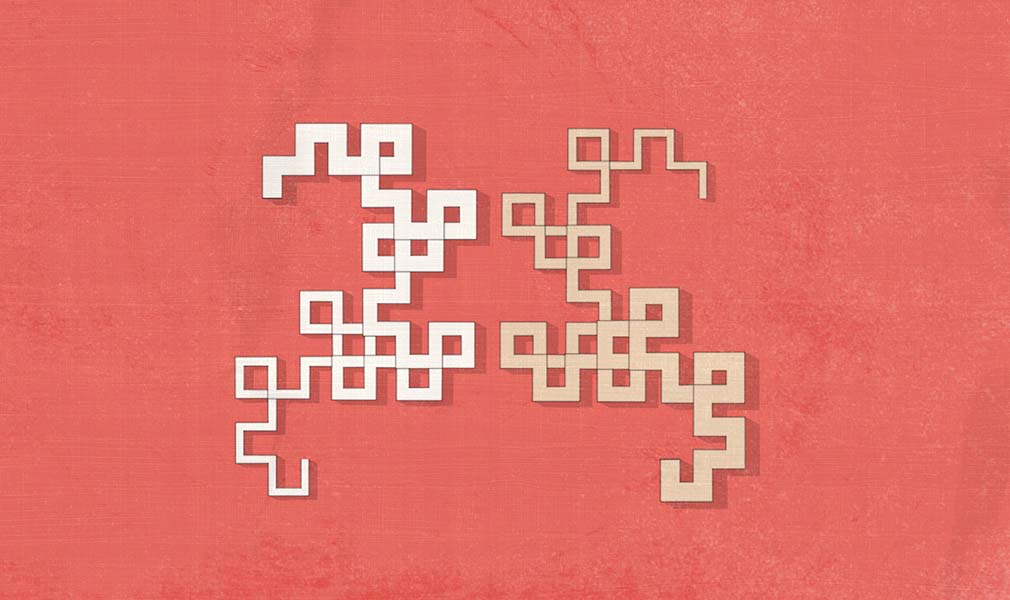

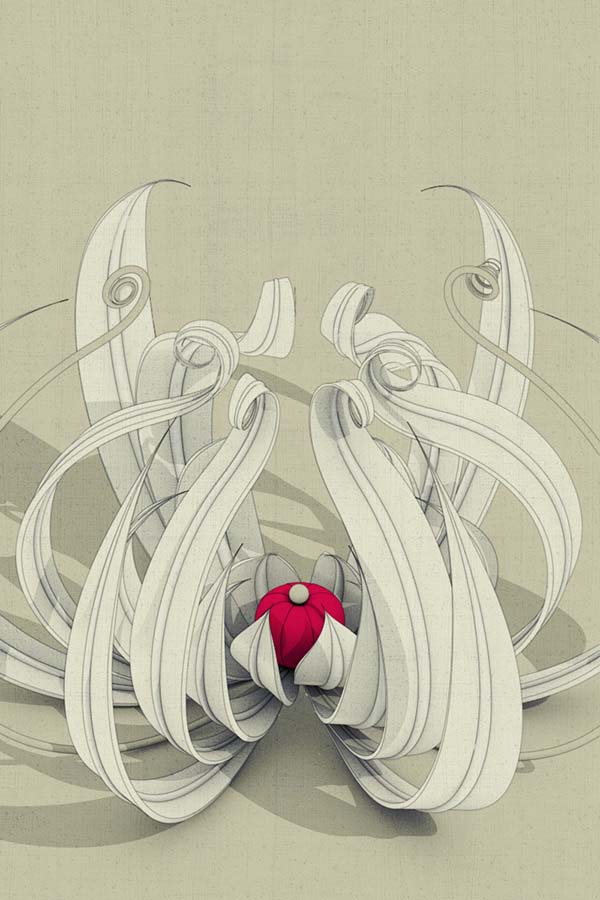

3D Work

Vincent gravitated to 3D art because of the sheer power of 3D tools such as Maxon's Cinema 4D, which let you create whole worlds and complex scenes with comparatively little effort. These tools also offer mind-blowing flexibility compared to other solutions (certainly traditional media, but also 2D graphical environments). For example, if you do not like the angle from which an image shows a building, you can always change the point of view and the camera angle. With other solutions, you essentially have to redraw and recreate the new scene, hoping it will meet your expectations.
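The camera example can be made concrete with a little geometry: the scene stays untouched and only a few camera parameters change. The sketch below is a generic orbit camera in plain Python, not Cinema 4D's API; it assumes a Y-up coordinate system and describes the viewpoint as a distance (`radius`) plus two angles around the subject. The function names are hypothetical.

```python
import math


def orbit_camera(target, radius, azimuth_deg, elevation_deg):
    """Place a camera on a sphere around `target` and return its (x, y, z) position.

    Azimuth rotates around the vertical (Y) axis; elevation tilts up from the ground plane.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    tx, ty, tz = target
    x = tx + radius * math.cos(el) * math.sin(az)
    y = ty + radius * math.sin(el)
    z = tz + radius * math.cos(el) * math.cos(az)
    return (x, y, z)


def look_at_direction(position, target):
    """Unit vector pointing from the camera position towards the target."""
    d = [t - p for p, t in zip(position, target)]
    length = math.sqrt(sum(c * c for c in d))
    return tuple(c / length for c in d)
```

Moving from a frontal view (`azimuth_deg=0`) to a three-quarter view (`azimuth_deg=45`) is a one-number change, whereas in a drawing it would mean redrawing the building.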

Despite his drive towards 3D tooling, Vincent still has the same passion for 2D rendering and the type of woodblock prints mentioned earlier.

After learning to use Cinema 4D, Vincent focused more specifically on rendering, trying to achieve renders that are more reminiscent of traditional 2D art.

You can find more of his work on Behance. Here are a few examples.

Japanese crests

Abstract

Moonlight

Abstract plant

Generative Artificial Intelligence (AI) work

In mid-2022, Vincent started to learn more about generative AI. You can see his experiments in that field on his Instagram account. The following describes his process for using Artificial Intelligence.

AI Tools and Process

There are several players in the generative Artificial Intelligence art field. Vincent has experimented most with three of them:

An example of using generative Artificial Intelligence

Let's look at a real, concrete example: an art deco portrait Vincent created with AI tools. The following figure illustrates the three main steps.

- Step 1 – Raw image generated by MidJourney (left)

- Step 2 – Image touched up with DALL-E (center)

- Step 3 – Image after compositing and texturing in Photoshop

The steps are described in more detail below.

WORKING WITH AI TOOLS AND PHOTOSHOP

MidJourney: Creative Powerhouse (with imperfections)

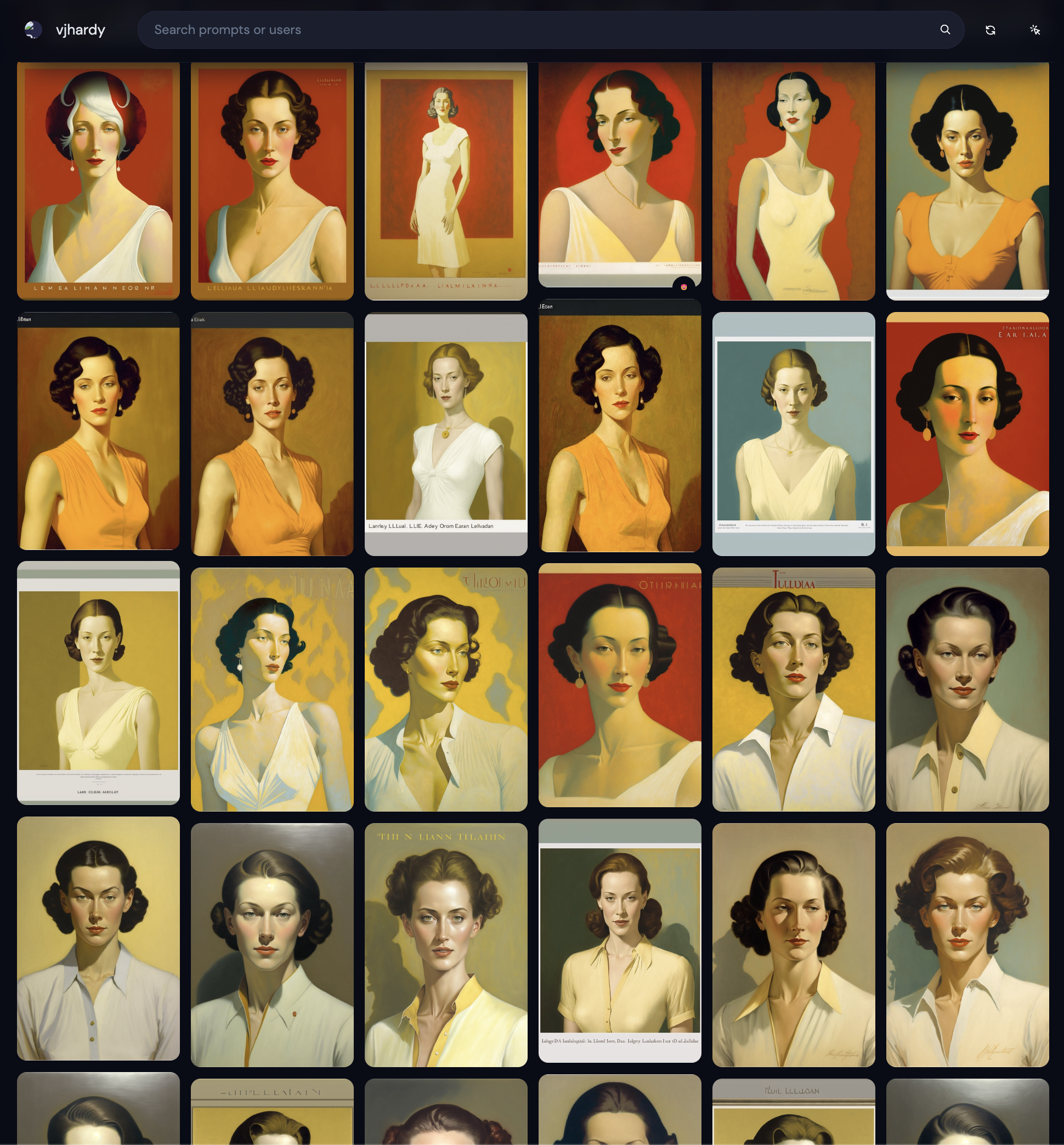

MidJourney generates images from prompts, i.e., textual descriptions of the image you want MidJourney to generate. It is fast (normally under a minute) and it is easy to iterate on prompts to try and direct the generator towards what you intend. The image below is a screen capture of multiple iterations MidJourney created as Vincent was varying and adjusting his prompts.


MIDJOURNEY ITERATIONS

The prompt he settled on for this image was:

Note that the prompt contains elements describing the subject, the composition, the style and even the mood.
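Because a prompt combines these ingredients, iterating often means changing one of them while keeping the others fixed. The helpers below (`build_prompt`, `prompt_variations`) are a hypothetical illustration of that workflow in Python: they do not call MidJourney, and the example values in the usage note are placeholders, not Vincent's actual prompt.

```python
from itertools import product


def build_prompt(subject, composition, style, mood):
    """Join the four prompt ingredients into one comma-separated text prompt, skipping empty ones."""
    parts = (subject, composition, style, mood)
    return ", ".join(p.strip() for p in parts if p.strip())


def prompt_variations(subject, compositions, styles, moods):
    """Yield every combination of composition, style and mood around a fixed subject."""
    for composition, style, mood in product(compositions, styles, moods):
        yield build_prompt(subject, composition, style, mood)
```

For instance, a fixed placeholder subject such as `"portrait of a woman"` with two compositions, one style and two moods gives four candidate prompts to try one after another, so only one ingredient changes between neighbouring iterations.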

Iterating on the prompt sometimes removes or modifies parts of the image. So Vincent often keeps an imperfect image that has parts he really likes, since he has other ways to correct or use it. That was the case for the image in this first step: while Vincent liked the general result, he did not want the orange frame, he wanted a different hairstyle and he wanted to extend the lower body. His next step was to touch up the image in DALL-E.

MIDJOURNEY RAW OUTPUT

DALL-E: Creative touch-ups

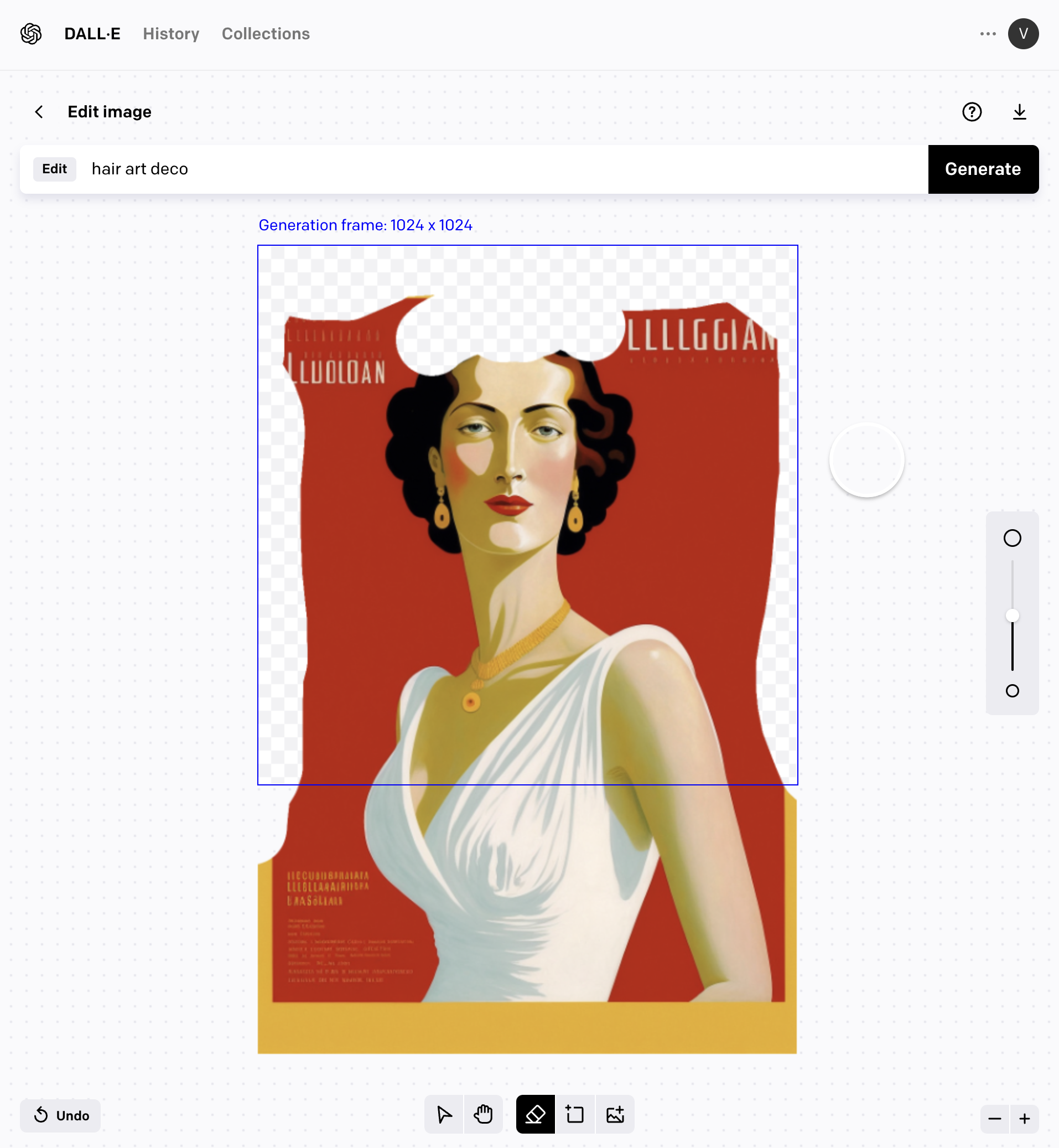

Comparing the second image with the raw MidJourney output, you can see that Vincent used DALL-E 2 to remove the bottom part of the orange frame and replace it with the lower part of the body. This is done by bringing the MidJourney output into DALL-E's editor, erasing the parts he did not like, and then directing DALL-E to fill these areas with a new prompt. This process is called 'inpainting'. His prompt was simply:


Vincent used the same feature with the simple prompt "hair" to touch up the woman's hairdo at the top of the head and remove the white part.
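Erasing areas in the editor effectively defines a mask: transparent pixels tell the model where to paint, and everything else is kept. OpenAI's image edit API for DALL-E 2, for example, accepts such a mask as a PNG whose fully transparent areas mark the regions to regenerate. The sketch below only builds the alpha channel as nested Python lists from hypothetical rectangles (standing in for the lower frame and the top of the hair); it makes no API call, and the function names are assumptions.

```python
def make_inpaint_mask(width, height, erased_boxes):
    """Build a height x width alpha mask: 0 (transparent) where the image was erased, 255 elsewhere.

    erased_boxes: list of (left, top, right, bottom) pixel rectangles, right/bottom exclusive.
    """
    mask = [[255] * width for _ in range(height)]
    for left, top, right, bottom in erased_boxes:
        # Clamp each rectangle to the image bounds before clearing it.
        for y in range(max(0, top), min(height, bottom)):
            for x in range(max(0, left), min(width, right)):
                mask[y][x] = 0
    return mask


def erased_fraction(mask):
    """Share of pixels the generator is asked to repaint."""
    total = sum(len(row) for row in mask)
    erased = sum(row.count(0) for row in mask)
    return erased / total
```

On an 8 x 8 image, clearing the two bottom rows and a 4-pixel strip at the top leaves 20 of 64 pixels (31.25%) to be repainted; everything else is preserved from the MidJourney output.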

DALL-E 2 OUTPUT

Below is a screen capture of the simple DALL-E user interface. Note the eraser tool, which let him clear the parts he wanted to replace, and the text field at the top for entering the prompt that describes what should be painted into the cleared areas.

DALL-E 2 USER INTERFACE

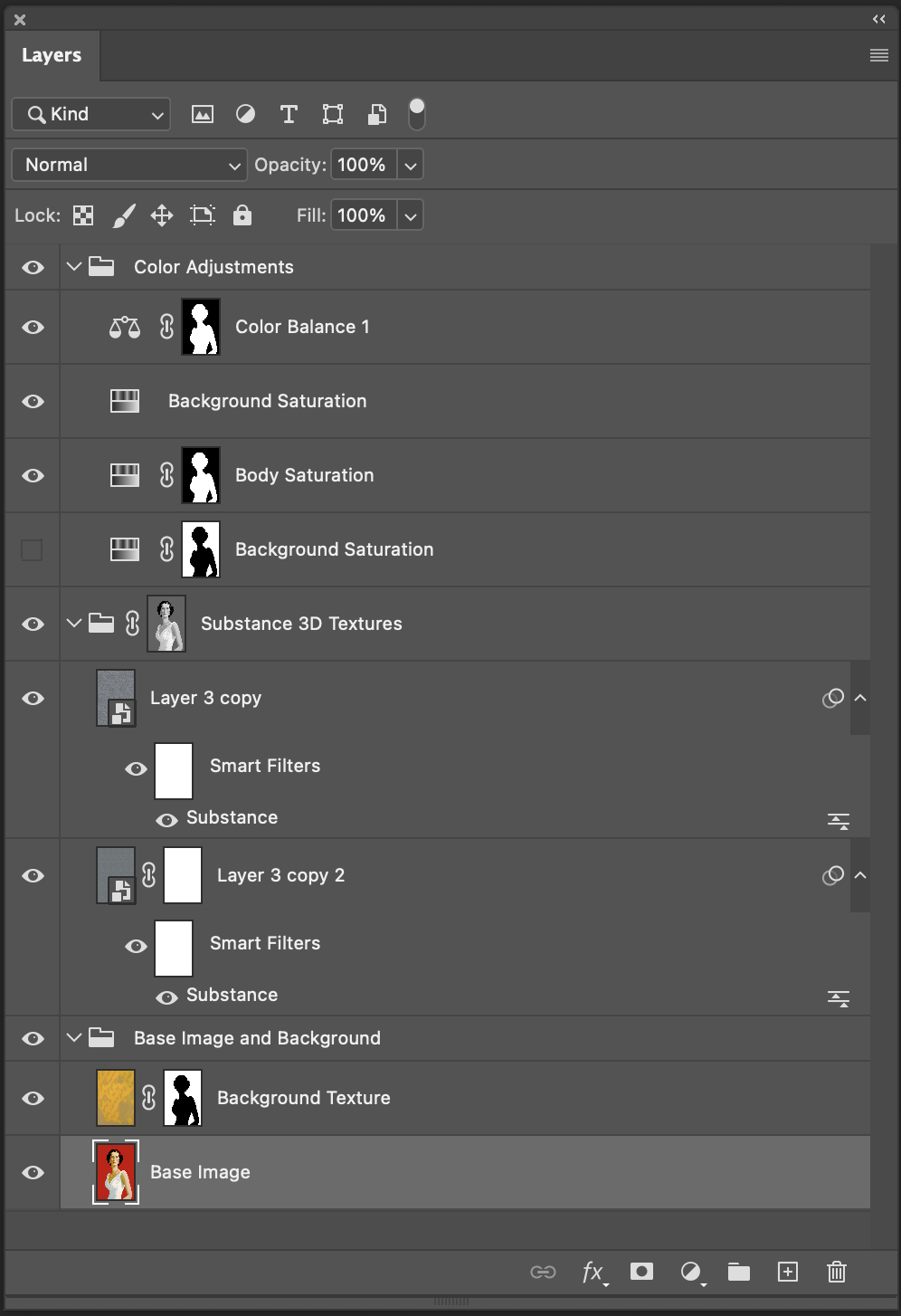

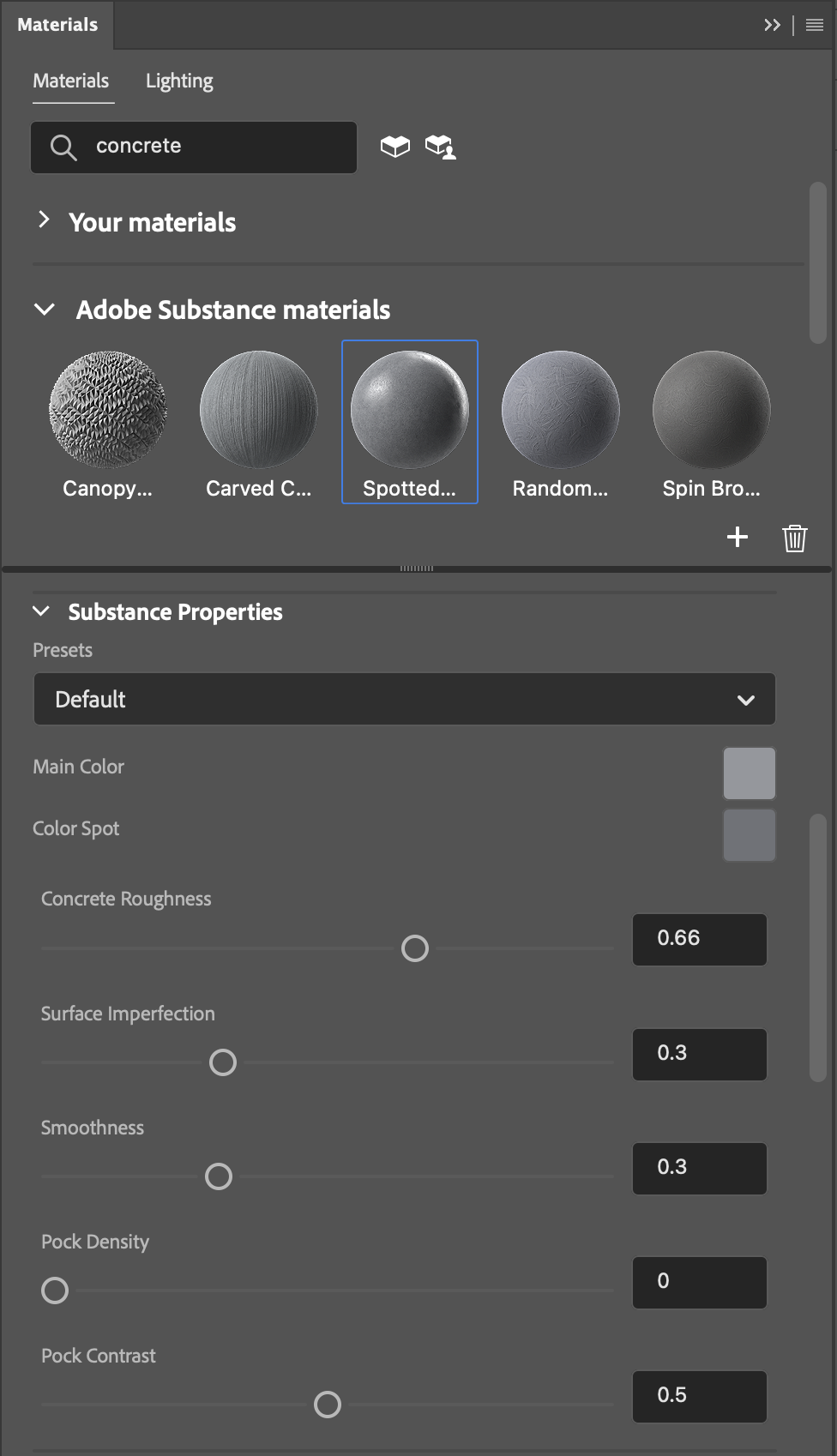

STEP 3 – Photoshop: Texturing, Upscaling and Compositing

Vincent brought the image into Photoshop and used the Content-Aware Fill feature extensively (it works great for removing things like the remainder of the frame and the unwanted text over a fairly simple background), then used masking and compositing to adjust the background. Finally, he used Substance 3D materials to give the image texture. These are parametric textures (as opposed to plain images), which give a lot of creative control. For example, Vincent uses concrete and paper textures, and Substance 3D materials give him control over parameters such as roughness, contrast or color variations.
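The benefit of parametric textures is that a material is described by a few numbers rather than stored as fixed pixels, so it can be regenerated with different settings instead of repainted. The following Python sketch is a deliberately simple, hypothetical stand-in (uniform random grain, not how Substance 3D materials are built): `roughness` controls the amount of grain, `contrast` scales deviations from the base grey level, and `seed` makes the result reproducible.

```python
import random


def parametric_texture(width, height, seed=0, roughness=0.5, contrast=1.0, base=0.5):
    """Return a height x width grid of grey values in [0, 1].

    roughness: 0 gives a flat surface, 1 gives full-strength per-pixel grain.
    contrast:  scales deviations from the base grey level.
    base:      mean grey level of the material.
    """
    rng = random.Random(seed)  # same parameters + seed -> same texture, so it can be re-tweaked later
    grid = []
    for _ in range(height):
        row = []
        for _ in range(width):
            grain = rng.uniform(-0.5, 0.5) * roughness
            value = base + grain * contrast
            row.append(min(1.0, max(0.0, value)))  # clamp to the valid range
        grid.append(row)
    return grid
```

Doubling `contrast` with the same seed keeps the same grain pattern but doubles its deviations (as long as no value is clamped), which is the kind of non-destructive adjustment a fixed texture image cannot offer.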


PHOTOSHOP MASKING, COMPOSITING AND TEXTURING

PHOTOSHOP WITH SUBSTANCE 3D TEXTURES

PHOTOSHOP CONTENT AWARE FILL

FINAL IMAGE FROM PHOTOSHOP

Other uses of AI tools

Vincent generally uses the neural filters in Photoshop for important tasks such as:

- Upscaling with "Super Zoom". This works very well, and he uses it to get higher-resolution versions of images coming from MidJourney / DALL-E.

- "JPEG Artifacts Removal", for when images from MidJourney and DALL-E show compression artifacts.

- "Color Harmonization", for when composited images need their colors harmonized.
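As a rough, non-neural illustration of what harmonizing colors means (not how Photoshop's filter works), the Python sketch below uses a classic statistical color-matching technique: each channel of the pasted layer is shifted and scaled so that its mean and standard deviation match those of the background. Pixels are assumed to be `(r, g, b)` tuples in the 0 to 255 range, and the function names are hypothetical.

```python
from statistics import mean, pstdev


def harmonize_channel(source, reference):
    """Shift and scale one color channel so its mean/std match the reference channel."""
    s_mean, s_std = mean(source), pstdev(source)
    r_mean, r_std = mean(reference), pstdev(reference)
    scale = r_std / s_std if s_std > 0 else 1.0  # a flat channel is only shifted, not scaled
    return [min(255.0, max(0.0, (v - s_mean) * scale + r_mean)) for v in source]


def harmonize(source_pixels, reference_pixels):
    """Apply per-channel matching to lists of (r, g, b) tuples."""
    channels = []
    for c in range(3):
        src = [p[c] for p in source_pixels]
        ref = [p[c] for p in reference_pixels]
        channels.append(harmonize_channel(src, ref))
    return list(zip(*channels))
```

Matching simple statistics like this is crude compared with a learned filter, but it shows the goal: the composited element ends up with the same overall brightness and color spread as its new background.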

In addition, Vincent uses the Liquify filter. It works well on many types of images, but especially on portraits, where you can adjust facial features or body position, for example.
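Liquify-style warping can be thought of as displacing points by the drag vector of the brush, weighted by a falloff that is strongest at the brush center and fades to zero at its edge. The Python sketch below applies that idea to a single point; the function name and the smooth falloff curve are assumptions, not Photoshop's actual formula. A full implementation would apply such a displacement field to every pixel and resample the image, which is omitted here.

```python
import math


def push_warp(point, brush_center, brush_radius, drag):
    """Displace a 2D point by `drag`, weighted by a smooth falloff from the brush center."""
    dx = point[0] - brush_center[0]
    dy = point[1] - brush_center[1]
    dist = math.hypot(dx, dy)
    if dist >= brush_radius:
        return point  # outside the brush: unchanged
    weight = (1 - (dist / brush_radius) ** 2) ** 2  # 1 at the center, 0 at the edge
    return (point[0] + drag[0] * weight, point[1] + drag[1] * weight)
```

Halfway between the center and the edge of the brush, a point moves by about 56% of the drag, which is why small strokes can subtly reshape a facial feature without tearing the surrounding image.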